It’s been a few years since I’ve been able to attend Check Point Experience (CPX), the annual user conference held by Check Point in Europe and the US. This year, I attended the event in Chicago, which, according to Check Point Founder and CEO Gil Shwed, had close to 2,000 attendees. It’s definitely smaller than RSA Conference, a more general security industry trade show that I also attended earlier this year. It is a bit more intimate, though, which is a good thing.

CPX runs for two days and has a combination of general sessions and smaller, more focused sessions covering individual products and services. A handful of customers also schedule one-on-ones with Check Point executives. There is also an expo floor where vendors have booths demonstrating their products and services. Check Point had a few booths for its various product and services offerings, and yes, I had to stand at a couple of them to do my part for the cause.

While I always enjoy hearing what the Check Point executives have to say, the reality is that, as a Check Point employee, I’d already heard much of it at our Sales Kick Off earlier this year. I was more interested in the other speakers at the general sessions, which, at CPX, included a congressman, a futurist, and customers.

The guy from the Missouri State Police Department provided the most distinctive perspective, I thought. Not so much about the threats, though it was interesting to hear a bit about the Anonymous attacks they suffered during the Ferguson incident a couple of years back. What stuck with me was the why, something we often don’t think much about. In this case, the “hacking” and “doxxing” Anonymous was doing in Ferguson had immediate, real-world consequences for the very people entrusted with protecting citizens.

Information security for the Missouri State Police Department is about protecting these fine folks who protect our safety. That’s a bit of a different goal from that of a lot of other organizations.

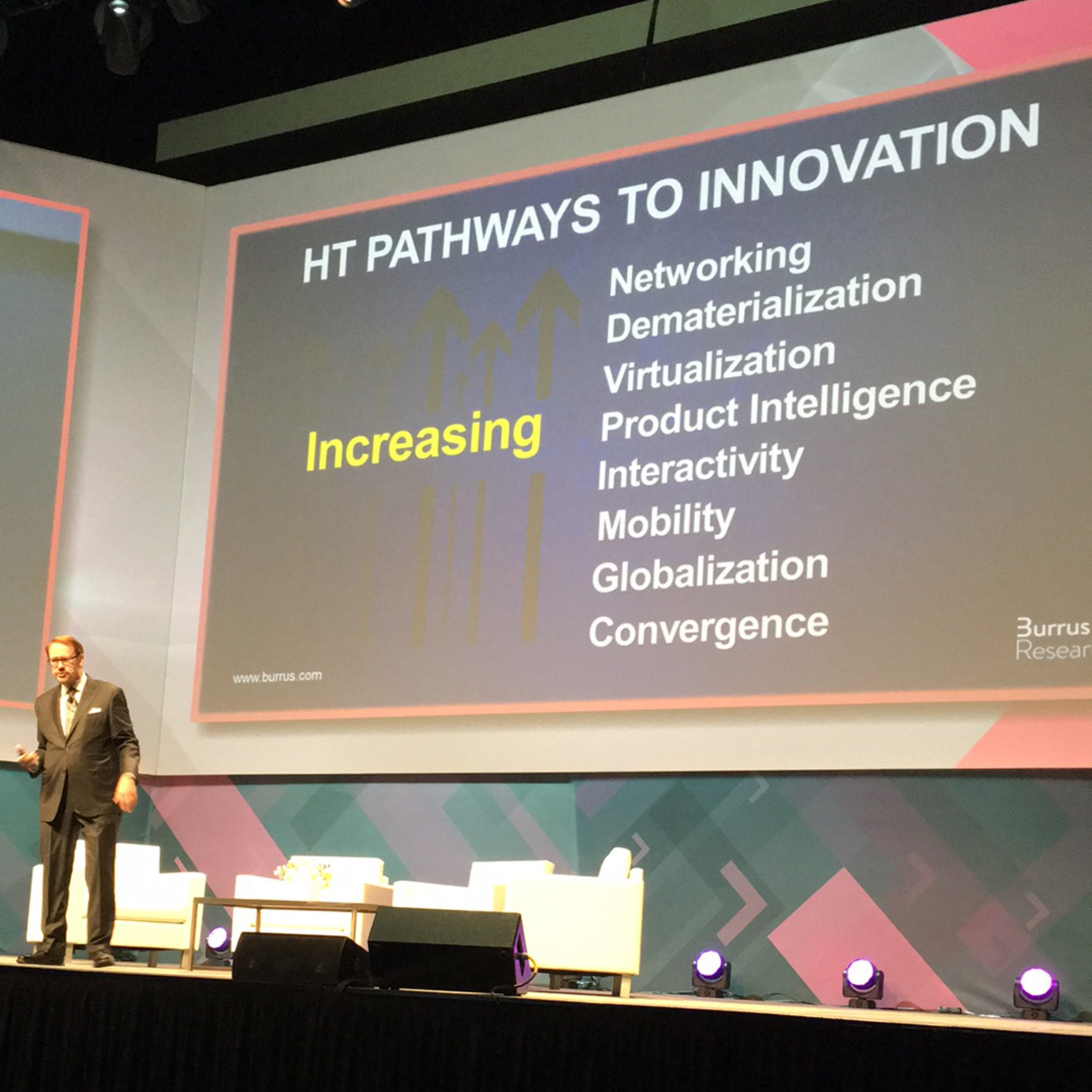

One of my favorite sessions was from Daniel Burrus, a best-selling author and futurist who has been predicting the future for more than three decades. There was lots of talk about hard and soft trends and about using all that big data to look at the future instead of the past.

The Pathways to Innovation in the above slide were originally written in 1985. They haven’t changed. My favorite insight from his presentation? Rather than complaining about government regulations (a hard trend), look for the opportunities they present. They’re there.

And, of course, there’s Moti Sagey. I always enjoy his Sales Kick Off presentations about the competition, and his CPX presentation did not disappoint. Even though he is part of Check Point’s marketing organization, his presentations are low on fluff and high on facts, with plenty of humor.

There were a few tracks of breakout sessions, which I have to admit I did not attend, because I already knew a lot of the content and I had to work some of the Check Point booths on the expo floor. The feedback I got from customers on the sessions was excellent; they provided a lot of great information on new and recently announced products.

Other Vendors at CPX 2016 Chicago

Over Check Point’s 23-year history, a lot of former employees have gone on to start their own companies or work for companies started by former Check Point employees. One of the newer entrants in this space that had a booth at our expo was a company called Fireglass, which basically takes all of the code that runs in a browser and runs it somewhere else, exposing only visuals to the end user. It can also use Check Point’s SandBlast technology to handle file downloads, reducing the risk of zero-day malware reaching the end user’s workstation. It’s extremely clever.

Another company involving former Check Point employees is GuardiCore, which has a clever solution for figuring out the traffic flows inside your virtualized environment so you can perform appropriate microsegmentation with vSEC. It can also identify rogue traffic flows, which is another useful feature. For a bit more, check out “Micro-Segmentation, the right way.” Also, Product Manager Lior Neudorfer snapped a photo with me:

Indeni is a tool that monitors security devices such as Check Point gateways to, in their words, “power smarter networks through machine learning and predictive analysis technology, enabling companies to focus on growth acceleration rather than network failures.” They gave out happy face shirts (who can be unhappy wearing a happy face shirt?) and let you shoot Nerf guns at their booth. It looks easier than it is.

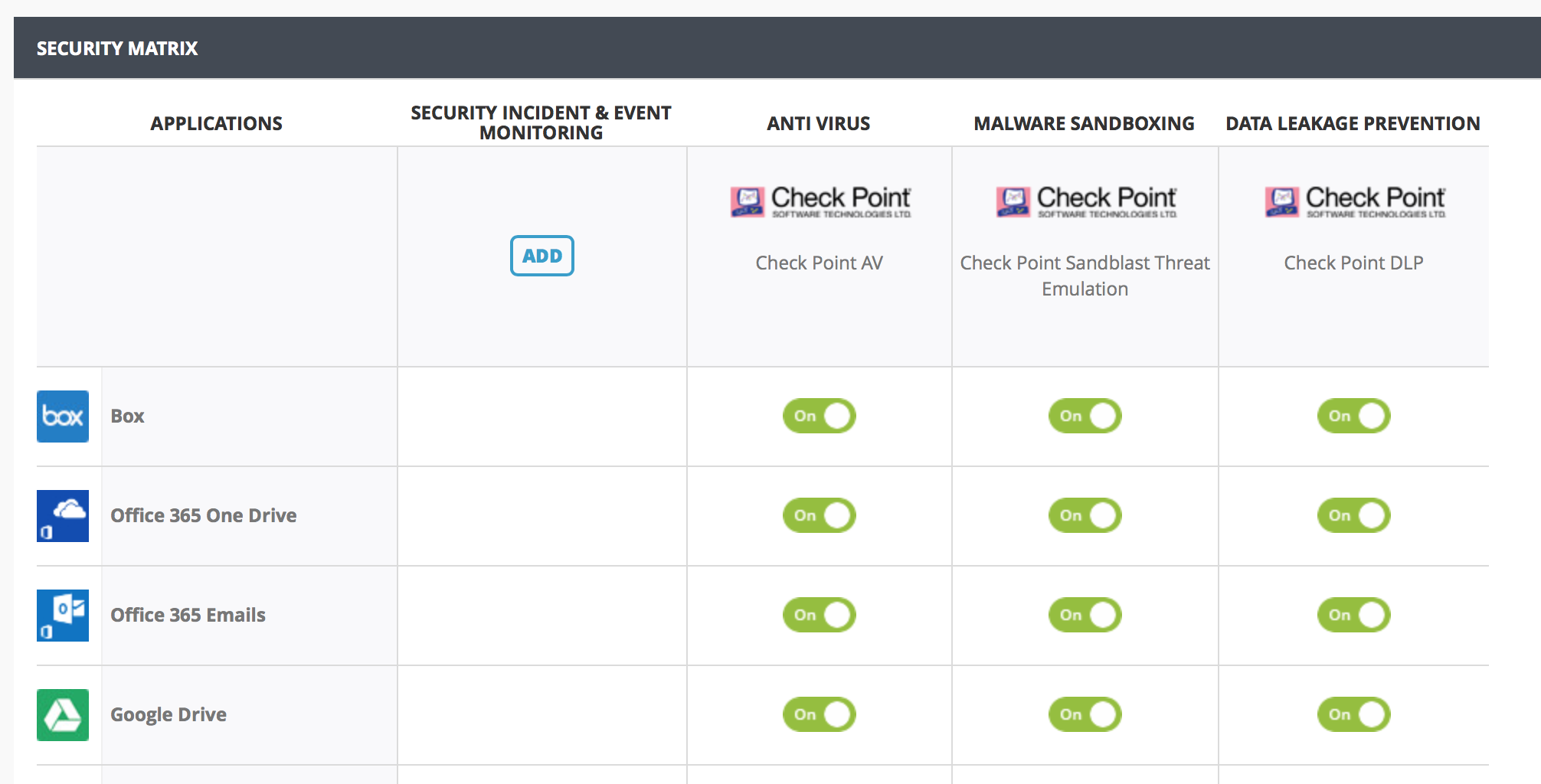

One other notable company was Avanan. They are a cloud access security broker (CASB), which sits between an organization’s on-premises infrastructure and a cloud provider’s infrastructure. CASBs integrate familiar security controls with SaaS applications to extend visibility and enforcement of security policy beyond on-premises infrastructure. Avanan works with a number of security vendors, including Check Point. Specific to Check Point, they support Threat Emulation, Anti-Virus, and Data Loss Prevention with a growing list of SaaS applications such as Office 365, Google Enterprise, Box, and more.

There was a lot more to unpack from those two days in Chicago, but that’s enough to give you a taste. If you use (or sell) Check Point products, I highly recommend attending next year to find out how Check Point and its partners can keep you one step ahead of the threats.

Edited to add: I got Lior Neudorfer’s title wrong; he’s a Product Manager at GuardiCore, not CEO. I also added a link to a Medium post about the product they showed me at CPX.

Disclaimer: If it’s not clear from the above, I work for Check Point. Hopefully it’s clear these are my opinions and Check Point’s official opinions may differ.