From Wikipedia:

> Responsible disclosure is a computer security term describing a vulnerability disclosure model. It is like full disclosure, with the addition that all stakeholders agree to allow a period of time for the vulnerability to be patched before publishing the details.

While there is a fair amount of debate over what counts as a reasonable patching window, and sometimes even over what constitutes a vulnerability, most people I know in the industry agree that responsible disclosure is a good thing overall.

The responsible disclosure process makes the software and services we rely on every day better and more resilient against malicious actors who regularly try to subvert these systems for their own gain. As a Check Point employee, I see both sides of this process: Check Point receives vulnerability reports from the community, and it also discloses vulnerabilities to other organizations.

I’ve been directly involved in a couple of vulnerability disclosures related to Check Point products. While I can’t get into specifics, I believe those issues were responded to quickly and appropriately.

On the other side, Check Point finds and discloses vulnerabilities in third-party products as part of its ongoing security research. Some recent examples include:

- Issues in WordPress core involving privilege escalation, SQL injection, and page generation, patched in WordPress 4.3.1 and later

- “MaliciousCard” vulnerabilities in WhatsApp, which allowed remote code execution on victims’ computers

- A privacy issue in iOS versions prior to 9.0 whereby user credentials could be exposed on a device even after the user had logged out of their Apple ID. Notably, this was disclosed to Apple eight months before the release of iOS 9.

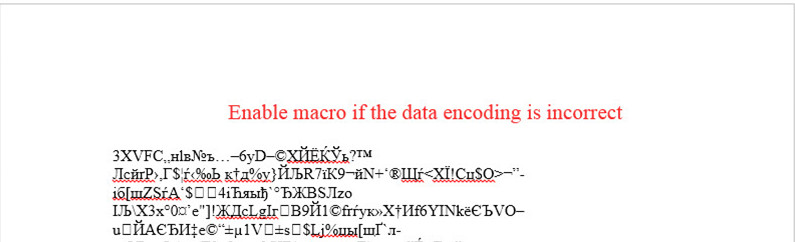

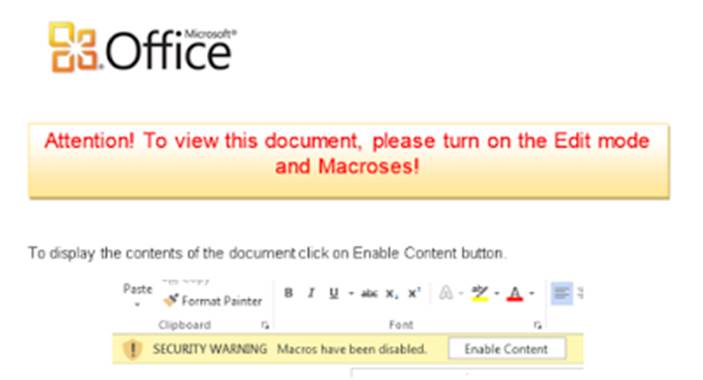

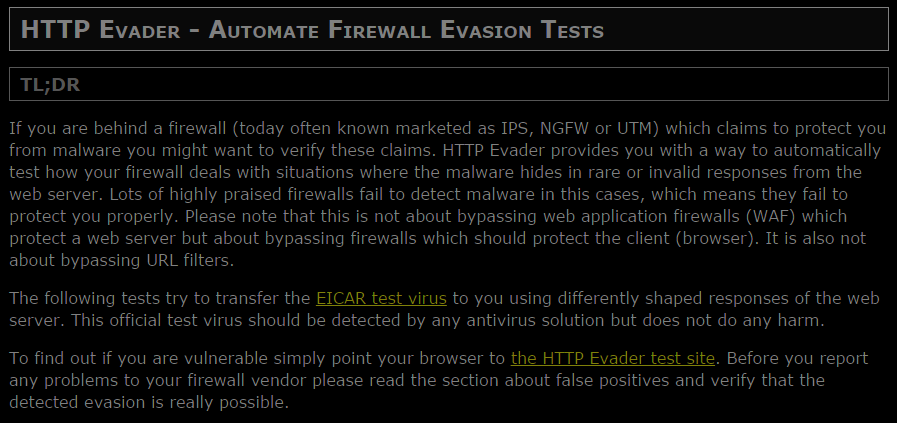

Check Point’s research also covers products that compete with Check Point in the marketplace. The latest example is a complete block bypass in Cisco Firepower, which Check Point demonstrated in a proof-of-concept video.

As noted at the beginning of the video, the issue was disclosed to Cisco in November 2015 and was remediated by Cisco today (30 March 2016), 134 days after the initial disclosure. Check Point disclosed nothing publicly until this date. Check Point worked closely with Cisco PSIRT, which was cooperative and professional throughout the entire process.

While researching competitors’ products may bring some competitive benefit, it speaks more to the fact that Check Point wants better security for everyone, not just for those who happen to be Check Point customers. I think Mahatma Gandhi said it best:

“The best propaganda is not pamphleteering, but for each one of us to try to live the life we would have the world live.”

Check Point is leading the responsible disclosure debate by example here. It’s one of the things that makes me proud to work for Check Point.